About the course

Artificial Intelligence in healthcare does not merely optimise processes — it influences diagnoses, treatment pathways, and patient safety.

This capstone is the culminating stage of the C-Lab Institute AI Responsibility pathway. Participants are placed in a healthcare institution that has deployed a clinical AI system to assist with diagnostic decision-making.

Initial results showed efficiency gains.

However, new evidence suggests possible bias across demographic groups, incomplete validation studies, and regulatory review concerns.

- Media scrutiny is increasing.

- Regulators are requesting documentation.

- Clinicians are divided in opinion.

The hospital board must determine whether the system should continue, be restricted, redesigned, or suspended.

This is not a technical audit.

It is a governance decision involving patient safety and public trust.

In healthcare, AI responsibility is not optional.

It is a clinical obligation.

Technology may assist decision-making, but governance protects patients.

Without governance, innovation can harm.

With governance, innovation heals.

What you'll learn

- Risk Analysis: Identify clinical safety threats, demographic biases, and gaps in AI validation studies.

- Regulatory Navigation: Evaluate institutional exposure to regulatory requirements and medical liability.

- Governance Design: Construct oversight committees with clear accountability between clinical and technical staff.

- Operational Safeguards: Implement "human-in-the-loop" protocols and adverse event reporting systems.

- Strategic Decision-Making: Formulate defensible recommendations to continue, restrict, or pause AI deployment.

- Compliance Roadmapping: Develop 12-month monitoring plans to ensure long-term AI transparency and safety.

Requirements

- Programme Alignment: C-Lab AI Leadership Pathway – Responsibility Stage

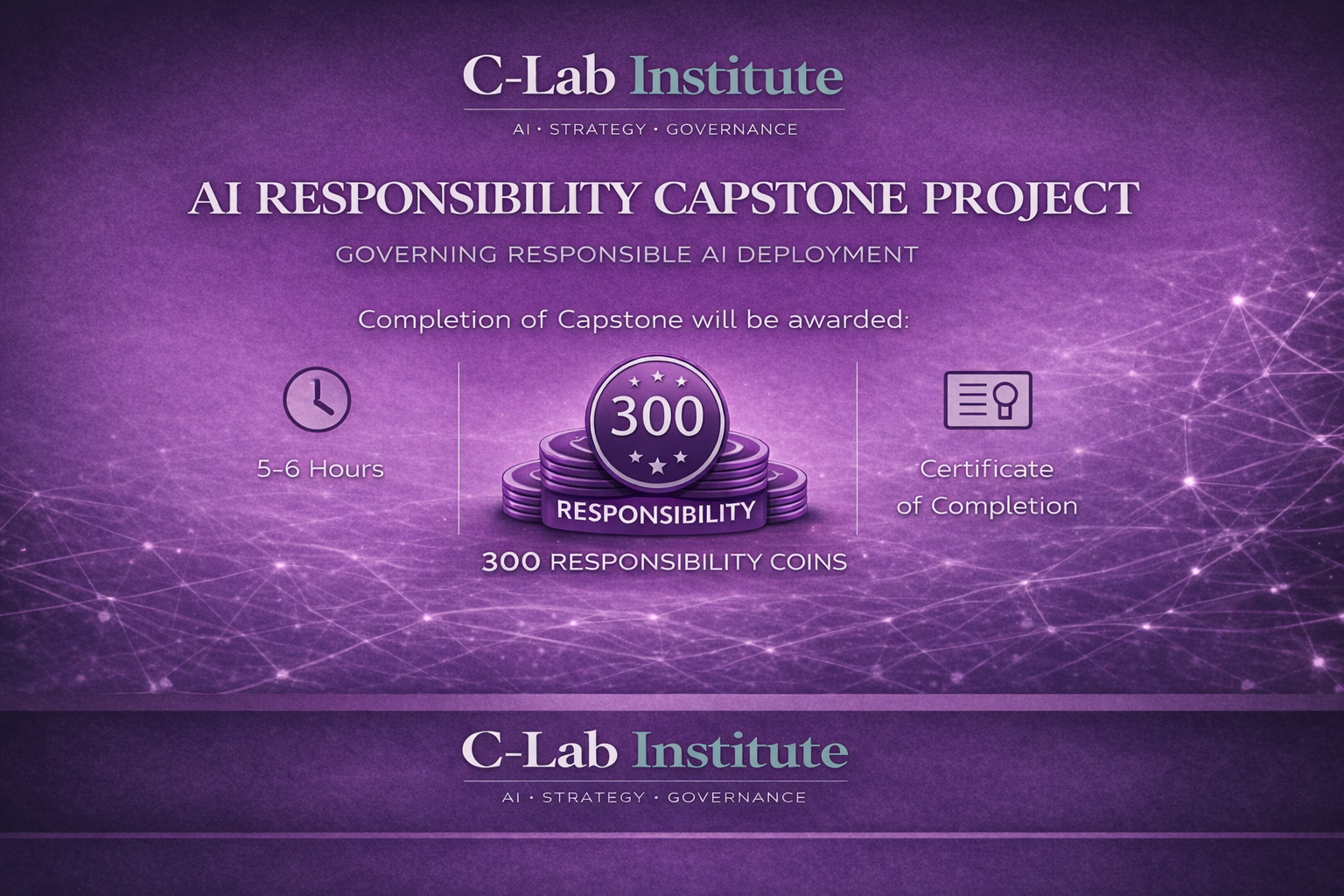

- Coin Allocation: Responsibility Coins

- Prerequisite: Completion of AI Readiness for Leaders

Course content

Capstone Objective

Participants will act as Chair of the Clinical AI Oversight Committee and prepare a formal Regulatory & Board Advisory Memorandum recommending a responsible course of action.

The capstone evaluates the leader’s ability to:

- Identify patient safety and clinical risk

- Assess validation, bias, and model performance concerns

- Evaluate regulatory compliance exposure

- Design oversight, audit, and accountability structures

- Propose monitoring and escalation mechanisms

- Make a defensible recommendation to continue, restrict, or pause deployment

Award

🏅 300 Responsibility Coins

📜 C-Lab Institute AI Responsibility Capstone Certificate

Primary 3R Dimension: Responsibility (Do)

Progression Path: Leader → Fellow

Enrolment options

AI Responsibility Capstone: Clinical AI Under Regulatory Scrutiny - Healthcare Case Study
